Landscape Survey with Extension and agInnovation Leaders


Authors

Aaron Weibe, Ph.D.

Director of Technology Services & Communications

Extension Foundation

aaronweibe@extension.org

David Warren

Senior Director of Integrated Digital Strategies

Oklahoma State University & Extension Foundation

davidwarren@extension.org

Dhruti Patel, Ed.D.

Senior Agent, Family & Consumer Sciences

University of Maryland Extension

dhrutip@umd.edu

Mark Locklear

Digital Systems & IT Operations Manager

Extension Foundation

marklocklear@extension.org

Acknowledgments and Appreciation

The Extension Foundation extends its sincere gratitude to the leaders, partners, and facilitators whose dedication made this work possible.

Dr. Damona Doye

Associate Vice President, Oklahoma Cooperative Extension Service, Oklahoma State University

Dr. Alton Thompson

Executive Director, Association of 1890 Research and Extension Administrators (AERA), based at North Carolina A&T State University

Dr. Beverly Coberly

Chief Executive Officer, Extension Foundation

Bill Hoffman and the Extension Committee on Organization and Policy (ECOP)

David Warren

Oklahoma State University & Extension Foundation

Dr. Aaron Weibe

Extension Foundation

Dr. Dhruti Patel

University of Maryland Extension

Mark Locklear

Extension Foundation

Ashley Griffin

Chief Operating Officer, Extension Foundation and the New Technologies for Ag Extension (NTAE) program

University of New Hampshire Extension

Extension Foundation Staff and AI Advisory Board Members

For serving as facilitators and moderators during the focus group sessions, ensuring productive and forward-focused dialogue throughout Parts II and III of the Convening.

agInnovation and Extension Directors and Administrators

For their time, thoughtful engagement, and contributions to shaping the national conversation on AI readiness, strategy, and implementation across the Land-grant system.

Regional Extension and agInnovation Directors and Administrators

USDA National Institute of Food and Agriculture

This report is supported in part by New Technologies for Ag Extension (funding opportunity no. USDA-NIFA-OP-010186), grant no. 2023-41595-41325 from the USDA National Institute of Food and Agriculture. Any opinions, findings, conclusions, or recommendations expressed in this publication are those of the author(s) and do not necessarily reflect the view of the U.S. Department of Agriculture.

Artificial intelligence (AI) represents a defining technological shift that is rapidly influencing how Land-grant Universities fulfill their public missions of research, education, and community service. Across both Cooperative Extension and agInnovation (Research) systems, AI holds the potential to enhance how science is generated, translated, and applied, improving accuracy, efficiency, and availability, while also introducing responsibilities for governance, workforce shifts, data safety, transparency, and ethical use.

Recognizing this pivotal moment, the Extension Foundation (EXF), in collaboration with the University of New Hampshire Extension and supported through the New Technologies for Ag Extension (NTAE) cooperative agreement with USDA’s National Institute of Food and Agriculture (NIFA), led a national effort to understand the current landscape of AI within both Extension and Research components of the Land-grant system. Contributing members included the Extension Foundation, Oklahoma State University Extension, and University of Maryland Extension.

This effort built upon current EXF initiatives that had already begun exploring practical AI applications for the system, including tools such as ExtensionBot and the MERLIN data management platform, both of which demonstrate early-stage models of AI-enabled information discovery and decision support.

The purpose of this study was to move beyond early exploration and toward a coordinated, leadership-driven framework for AI integration across Extension and agInnovation networks. Through a combination of survey research and facilitated convenings, the study examined institutional readiness, workforce development needs, policy structures, and leadership perspectives on responsible AI use across both missions.

Leaders across the Land-grant system identified three overarching priorities that define how Extension and Research should guide AI adoption nationally:

These priorities establish the foundation for the next phase of AI leadership across the Land-grant system. They ensure that new technologies reinforce, rather than replace, the human judgment and trust that define both the Research and Extension missions, and that the system serves as a trusted community partner in disseminating AI ethically.

To explore these priorities in depth, the study was organized in four sequential phases. It began with a national AI Landscape Assessment to establish a baseline understanding of readiness and current use across institutions. This was followed by three AI Convenings, one virtual and two in person, that engaged both Extension and Research Directors and Administrators in identifying priorities, validating findings, and developing actionable implementation strategies. The following sections outline the timeline of activities, the methods used, and the findings that emerged through this coordinated process.

System snapshot: a national baseline of AI awareness, use cases, and readiness across CES and agInnovation.

The study took place over a concentrated four-month period from June through September 2025. Each phase was designed to build upon the previous one, progressing from environmental scan to structured dialogue to consensus on leadership priorities and implementation strategies. The process intentionally combined the perspectives of Extension and agInnovation leadership to ensure alignment across both.

This timeline reflects a deliberate design. The initial landscape assessment provided a system-wide view of readiness, the virtual convening established shared current perspectives, and the two in-person convenings allowed leaders to establish priorities and co-create strategies for ethical governance, workforce training, and long-term infrastructure development. The sequence positioned the Land-grant system to move from awareness to coordinated priorities and future plans around AI adoption.

Quantitative Method

Qualitative Method

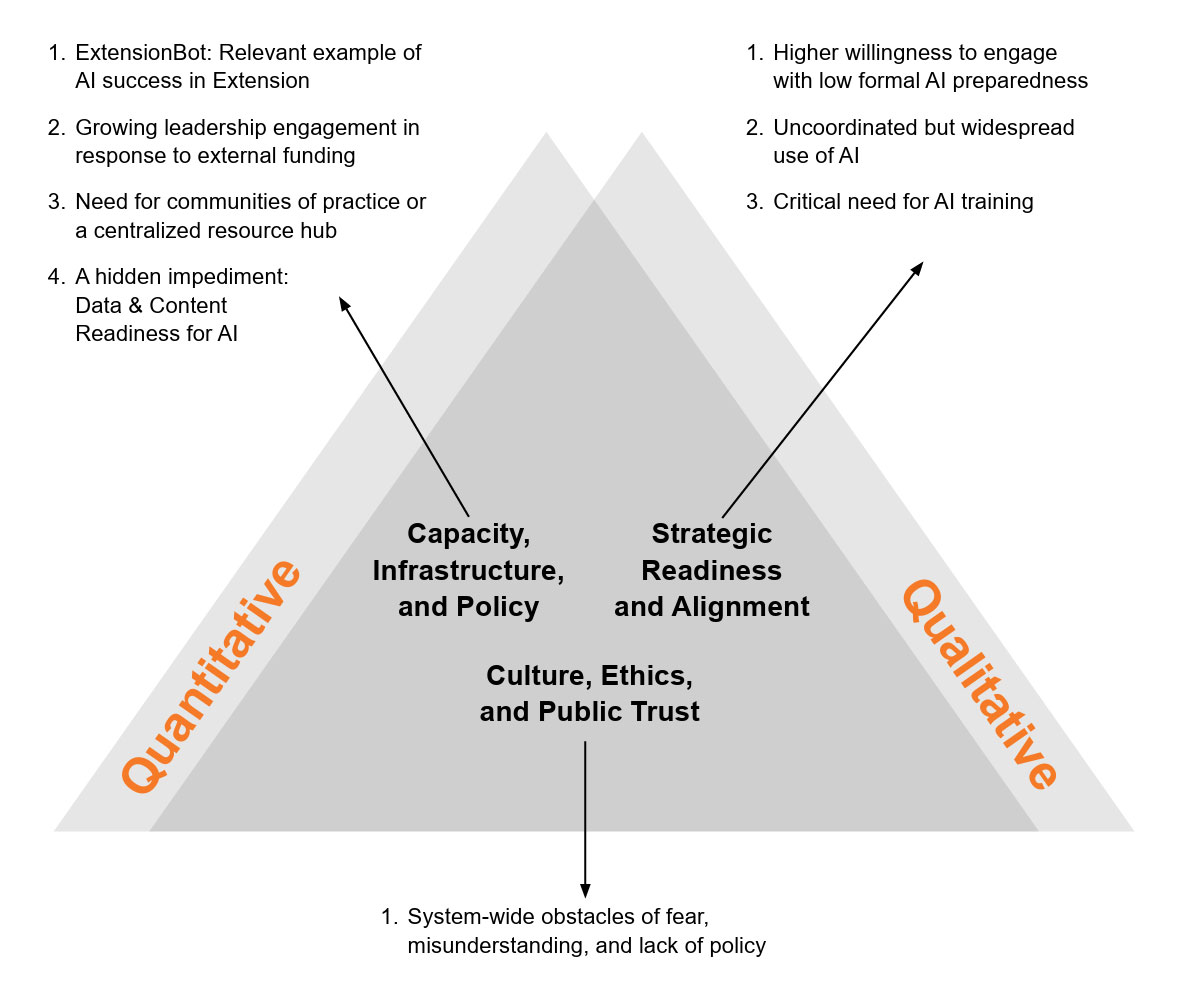

Triangulation of mixed-method data & generation of the three key themes used to inform prioritization steps:

Phase 1

The first phase was a national AI Landscape Assessment, a quantitative survey designed to establish a baseline understanding of AI readiness and current use across institutions. The survey drew 47 responses from Extension and agInnovation professionals across 29 states.

Phase 2

The second phase took place in July 2025 as a series of simultaneous, regional breakout sessions: 11 small, structured focus groups facilitated by members of the Extension Foundation staff and the national AI Advisory Board. More than 100 participants representing 41 universities took part in these 90-minute discussions. Following a brief overview of survey results, each group was guided by a single framing question, “Where are we now with AI?”, which was explored through related sub-questions addressing current awareness and ongoing initiatives; perceived challenges and risks; and emerging opportunities for Extension and Research. These regional focus groups provided the first qualitative layer of data, illustrating both the promise and fragmentation of AI activity across the Land-grant system. Phase 1 and Phase 2 data were triangulated to draw themes for the prioritization activity.

Phase 3

Phase 3, held in September 2025, focused on collective prioritization through two sequential 45-minute activities.

Activity 1, Brainstorming: The purpose of this phase was two-fold:

Participants were provided with a synthesis of findings from Phase 2 and asked to generate, in small groups, as many ideas as possible for how Cooperative Extension and agInnovation could advance AI policy, strategy, and application. Prompts focused on three key areas derived from the initial report:

Activity 2, Prioritization: Immediately following the brainstorming session, participants reviewed the full set of submitted ideas and deliberated to identify their top three priorities within each category. Using a structured worksheet and shared online database, groups collectively determined the nine most significant priorities to guide future implementation.

Phase 4

Between Phases 3 and 4, the facilitation and analysis team used ChatGPT and Google Gemini tools to assist in synthesizing the data collected from brainstorming and prioritization sessions. These tools helped consolidate hundreds of qualitative responses into thematic clusters by identifying redundancy, similarity, and co-occurring concepts across the datasets. The AI-assisted synthesis was then reviewed manually to ensure validity, accuracy, and interpretive rigor.

Participants then self-selected into breakout groups according to their area of greatest interest. Each group was tasked with developing strategies that could move these priorities from concept to practice, focusing on both immediate and long-term actions.

Facilitators guided each group through two key prompts: What strategies and resources are needed to implement these priorities? What barriers exist, and how can they be overcome?

To ensure applied outcomes, participants were encouraged to consider institutional structures, funding opportunities, partnerships, and mechanisms for accountability. Discussions were documented through shared digital forms, with each group’s scribe capturing proposed actions, obstacles, and enabling conditions.

The emerging themes represent the integrated leadership vision for AI within the Land-grant system, connecting ethical alignment, human oversight, workforce development, and shared infrastructure as mutually reinforcing priorities for future action.

During Phase 4, participants reconvened in person to review the top nine priorities, three under each of the key focus areas, and to develop strategies for action. In partially facilitated breakout discussions, participants explored the specific resources and partnerships needed for implementation; barriers that might impede success; and strategies to overcome those barriers and operationalize their ideas within institutional and system-wide contexts.

Qualitative data from Phases 2 through 4 were analyzed using thematic analysis by Dr. Dhruti Patel, supported by AI-assisted narrative synthesis to check coding accuracy, identify redundancies, and ensure data coherence. The analysis consolidated the collective input of participants into two overarching themes: Culture, Ethics, and Public Trust and Capacity, Infrastructure, and Policy. Each theme contained multiple subthemes derived from the coded dataset, reflecting the leadership priorities that emerged through iterative validation during the convenings.

Phase 1

When efforts are scattered, it can cause duplication of work, inconsistent methods, and missed chances for collaborative growth across the larger Extension and agInnovation systems. This highlights the need for a clear, central vision to effectively integrate new technologies within large organizations like ours.

Our quantitative investigation garnered 47 responses from Extension and agInnovation professionals across 29 states, representing a varied cross-section of roles. A significant majority, 61% of those surveyed, held leadership positions, including Deans, Assistant Deans, and Directors.

A more robust, cross-disciplinary engagement could lead to more comprehensive and sustainably funded AI strategies.

Overall, these figures highlight a significant gap in the development, communication, and perceived effectiveness of AI governance within institutions.

The absence of designated roles may impede the proactive development and implementation of robust ethical frameworks and best practices for AI within Extension and agInnovation.

Phase 2

Following the dissemination of the quantitative survey, a qualitative focus group investigation was conducted during the first AI Convening, a virtual meeting with Extension and agInnovation leaders and administrators. One hundred and six individuals representing 41 universities were in attendance.

The following themes emerged from the focus groups.

Phase 3

Following the exploratory discussions of Phase 2, the study advanced into a structured prioritization process designed to convert qualitative insights into clear, actionable focus areas for the Land-grant system.

Phase 4

Thematic analysis of Phases 2–4 revealed three themes: Culture, Ethics, and Public Trust; Capacity, Infrastructure, and Policy; and Strategic Readiness and Alignment. However, because the elements of Strategic Readiness and Alignment were already fully contained within the other two, we consolidated it and present the findings under the two overarching themes that most clearly reflect participants’ priorities. This consolidation does not remove this content; rather, it redistributes those elements under the two broader themes where they more logically belong. These themes synthesize hundreds of individual responses gathered through focus groups, prioritization activities, and implementation discussions, forming a comprehensive view of where Extension and agInnovation leaders believe coordinated action is most urgently needed.

Leaders across the Land-grant system consistently emphasized that AI adoption must remain human-centric, grounded in ethical principles, transparent practice, and public accountability. Participants warned that technical progress without safeguards could erode both credibility and community confidence.

Subtheme 1. Ethics and Human-Centrism

The most widely discussed imperative was to ensure that AI strengthens rather than replaces human expertise. Participants proposed the development of a formal “human-centric” code of conduct led jointly by Extension and agInnovation Directors and Deans. This policy framework would codify expectations for human oversight in all AI-supported research, teaching, and outreach activities. Such a framework would serve as a reference point for how AI tools are used, evaluated, and communicated, ensuring that institutional values and community trust remain central to technological innovation.

Subtheme 2. Attribution and Transparency

Leaders identified a strong need for consistent, verifiable attribution of AI contributions in publications and outputs. Respondents recommended standardized attribution guidelines that clearly distinguish where and how AI was used, differentiating between research papers and Extension materials. They also urged expansion of open-access publishing so AI systems can train on transparent, peer-reviewed data. This aligns with federal open-access mandates and reinforces integrity by making AI-assisted work traceable and auditable.

Subtheme 3. Risk Prevention

Participants cautioned that unchecked AI adoption could inadvertently displace critical thinking and essential human skills. To prevent this, institutions should frame AI explicitly as a tool that augments human capacity. Continuous professional development and reflective training were identified as the best safeguards against dependence on automated outputs.

Subtheme 4. Public Trust

Trust was recognized as the ultimate determinant of success. Leaders called for systematic efforts to measure stakeholder trust and to design AI applications that respect the comfort levels and expectations of end users. Transparent communication, demonstrated accountability, and community involvement in AI design were cited as key to sustaining public confidence in both Research and Extension programs.

The second theme addresses the structural conditions necessary to scale AI responsibly: a capable workforce, collaborative frameworks, and coordinated policy governance. Participants repeatedly emphasized that without shared infrastructure and systematic training, adoption would remain fragmented and unsustainable.

Subtheme 1. AI Workforce Readiness

Training emerged as the single most dominant priority. Leaders proposed a national, tiered AI training program led by Extension, featuring beginner-to-advanced pathways for faculty, researchers, agents, and community audiences.

Key components include:

Participants stressed that training must demonstrate tangible efficiency gains and be embedded within institutional strategic plans to reach beyond early adopters.

Subtheme 2. Collaborative Framework

No single university can meet AI demands alone. Leaders urged the creation of system-wide alliances across the Extension Committee on Organization and Policy and other partners to coordinate resources and prevent duplication. Examples included joint funding proposals, shared centers of excellence, and cross-state learning communities. The Extension Foundation was identified as a potential platform for distributing resources, webinars, and best-practice repositories that benefit all Land-grant types (1862, 1890, and 1994).

Subtheme 3. Policies and Best Practices

Governance must evolve in parallel with technology. Participants called for institution-wide policies clarifying acceptable AI use in research, teaching, and Extension outputs.

Recommendations included:

Subtheme 4. Infrastructure, Resource Allocation, and Funding

Sustainable AI integration depends on access to physical and financial resources. Leaders underscored the need for shared data repositories, compute capacity, and broadband access, as well as new funding mechanisms to offset training and infrastructure costs. They highlighted public-private partnerships and coordinated grant strategies as essential for building durable AI infrastructure across all institutions, including those with limited internal capacity.

Across both themes, participants voiced a consistent conclusion: policy must lead technology. Human oversight, ethical governance, and coordinated capacity building are prerequisites for AI to achieve its full potential in research and community engagement. AI should not be treated as an isolated innovation but as a system-wide transformation requiring collaboration, investment, and trust.

The AI Convening demonstrated that both Cooperative Extension and agInnovation leaders recognize AI as a defining factor in the future of research, education, and outreach across the Land-grant system. Participants viewed AI not as a single tool, but as an ecosystem-level transformation affecting how information is created, validated, shared, and applied. The discussions revealed broad enthusiasm and optimism about AI’s potential, paired with an understanding that the system’s success will depend on coordinated readiness, ethical governance, and investment in people and infrastructure. The convening sessions offered a clear message: both Extension and agInnovation are poised to take further steps in shaping responsible, mission-driven uses of AI, but doing so will require shared standards, structured collaboration, and a national vision.

AI experimentation is already occurring across the Land-grant system. Faculty, researchers, and educators are independently piloting AI tools for literature synthesis, data analysis, stakeholder communication, and decision support. This decentralized experimentation has generated valuable insights, yet leaders acknowledged that progress remains uneven and largely uncoordinated. Participants agreed that the next phase must focus on structured implementation. Both Extension and agInnovation institutions need frameworks that align experimentation with organizational priorities, create consistency across outputs, and promote cross-state learning and sharing. The challenge ahead is to connect local innovation with national coordination, enabling knowledge to scale without losing institutional flexibility.

A strong consensus emerged that workforce readiness is the most significant barrier to AI adoption. Both Extension and agInnovation rely on a workforce that blends subject-matter expertise with community engagement and applied research. Yet most professionals have not received structured training in AI literacy, ethics, or application. Participants emphasized that a tiered approach to workforce development is needed. Basic digital literacy must be coupled with advanced technical and ethical competencies for specialists, researchers, and educators. This training should not only focus on how to use AI tools, but also on how to critically evaluate AI-generated information, validate data, and communicate transparently with stakeholders.

Both Extension and agInnovation leaders reaffirmed that people must remain at the center of AI integration. Participants were clear that technology should augment human expertise, not replace it. This human-centric approach preserves the credibility, trust, and relational foundation that have defined the Land-grant mission for more than a century. Leaders identified a strong need for shared ethical frameworks, including policies on attribution, data privacy, intellectual property, and algorithmic fairness. A framework developed collaboratively across the system could help ensure that AI tools are used transparently and in alignment with Land-grant values.

Infrastructure disparities emerged as a major concern. While some institutions have advanced data systems and internal AI policies, others are still developing the foundational digital and policy environments necessary for integration. Participants noted that AI readiness requires not only technical infrastructure such as broadband, storage, and computing capacity, but also structured, available, and validated data. Shared data repositories, consistent metadata standards, and aligned policies on availability and governance were identified as key enablers for cross-institutional collaboration. Additionally, with concerns around data privacy and data security, institutions may consider training or educational best practices for those building and using AI applications. These investments would allow Extension and agInnovation to more effectively connect research outputs with educational and applied use cases, creating a unified ecosystem of reliable, AI-ready information.

The findings from the convening underscore that AI readiness represents both a challenge and an opportunity for Cooperative Extension and agInnovation. The decentralized nature of the Land-grant system remains a double-edged sword: it encourages innovation and adaptation, but can also lead to fragmentation if not intentionally aligned. To sustain national leadership in research and community engagement, Extension and agInnovation must advance in tandem. AI has the potential to accelerate the translation of research into practice, improve decision-making, and expand access to knowledge for all communities. Achieving this potential will depend on shared investment in training, infrastructure, policy development, and data stewardship.

The AI Convening emphasized that Extension and agInnovation leaders should translate ideas into actionable strategies for building AI readiness across the Land-grant system. The recommendations below represent the most prominent themes identified through the prioritization and implementation sessions. They reflect a shared vision among participants for creating a coordinated, ethical, and sustainable approach to artificial intelligence in research, education, and outreach. They also reflect a common vision of state Extension and agInnovation systems serving as trusted partners for AI in communities nationwide.

Participants emphasized that long-term success with AI requires a foundation of reliable infrastructure, robust policies, and human capacity building. While individual universities have made progress, systemwide coordination remains essential.

Key Recommendations:

Participants expressed a clear need for coordinated national direction to align AI initiatives, investments, and workforce development efforts. Without a unified strategy, efforts risk fragmentation and inefficiency.

Key Recommendations:

The convening underscored that public trust remains central to the Land-grant mission. Leaders agreed that transparency, attribution, and human oversight are critical to maintaining credibility as AI becomes more integrated into education and outreach.

Key Recommendations:

Participants consistently emphasized that collaboration across states, institutions, and disciplines is essential for scale and sustainability. A coordinated systemwide approach will accelerate innovation and prevent duplication of effort. To ensure the longevity of AI initiatives, institutions must align internal resources and pursue external funding that supports infrastructure, workforce development, and innovation.

Key Recommendations:

This AI effort highlighted both the momentum and the complexity of advancing AI across Cooperative Extension and agInnovation. While enthusiasm is strong, meaningful progress will depend on sustained coordination, shared accountability, and clear leadership structures that can carry this work forward nationally.

Participants consistently emphasized the need for a unified, systemwide approach to AI readiness. To guide that effort, the creation of a National AI Program Action Team under the Extension Committee on Organization and Policy (ECOP) is recommended.

This team would serve as a cross-institutional leadership body:

The convening results confirmed the need for systemwide professional development in AI literacy, ethics, and application. Institutions agreed that while many are independently developing training materials, a coordinated approach would improve quality, reduce duplication, and expand access.

A national framework for AI training should:

Based upon feedback from participants, the Extension Foundation can assist with organizing and nationalizing these efforts in collaboration with ECOP, NDEET (National Digital Extension Education Team), and other organizations, in addition to providing digital infrastructure for these training sessions. Working alongside content experts from universities, the Foundation can help standardize and distribute scalable training offerings through established platforms, ensuring systemwide availability.

To ensure interoperability across institutions, a coordinated focus on shared infrastructure and data standards is essential. Next steps should include:

As AI use expands, so too does the need for governance frameworks that preserve trust and transparency. Participants recommended:

Ongoing collaboration is essential to maintain momentum and ensure that AI strategies evolve alongside technology. Participants recommended:

As the Land-grant system advances toward a coordinated national strategy for Artificial Intelligence, the Extension Foundation continues to play a facilitative role in supporting Cooperative Extension and agInnovation with tools, infrastructure, and expertise that help translate system priorities into implementation. The insights and priorities emerging from this study align closely with several active NIFA-funded initiatives currently stewarded by the Foundation, particularly those advancing data readiness, workforce training, and AI-informed content infrastructure.

Through the New Technologies for Ag Extension (NTAE) program, the Extension Foundation has developed a portfolio of scalable, interoperable technologies designed to strengthen institutional capacity for AI adoption. Central to this work is MERLIN (Machine-driven Extension Research and Learning Innovation Network), a structured data platform that organizes, validates, and standardizes research and educational content for Extension and agInnovation. MERLIN enables Land-grant universities to prepare their data ecosystems for AI-driven applications by creating structured, shareable, and regularly updated datasets. This ensures that research outputs, fact sheets, and publications remain available, verifiable, and ready for integration with current and emerging AI systems. These efforts directly align with the priorities identified by convening participants, including shared infrastructure, increased availability, and consistent governance for data and content.

Complementing MERLIN is ExtensionBot, the Foundation’s national AI platform that makes validated Extension content discoverable and interactive. ExtensionBot allows institutional partners to customize chat-based interfaces powered by their own data sourced from MERLIN, enabling trusted, real-time responses to stakeholder inquiries.

This approach supports the convening’s calls for workforce development and strategic alignment by providing a tangible, ethical framework for AI adoption. Through these systems, institutions can explore AI use cases, strengthen internal capacity, and demonstrate transparent AI engagement with the public, while ensuring that all outputs remain grounded in peer-reviewed, research-based information.

To further support the national direction recommended in the convening, the Foundation is positioned to collaborate with ECOP, the proposed AI Program Action Team, and networks such as NDEET. These collaborations can help organize, coordinate, and nationalize training offerings that draw on the expertise of Land-grant content specialists, ensuring broad, consistent access to high-quality professional development across the system.

A central outcome of the AI Convening was the systemwide agreement that AI must remain human-centered: a tool to amplify, not replace, the expertise of researchers, educators, and Extension professionals. The Foundation’s tools embody this principle. ExtensionBot requires human validation and content review before public release, while MERLIN’s structure promotes transparency in authorship, attribution, and version control. These features ensure that AI integration enhances, rather than compromises, the trust and credibility that define the Land-grant mission.

From Consensus to Coordinated Action

Artificial intelligence is a defining shift in our world. This study confirms that the Land-grant system is ready to lead the way in navigating this new technology across its research, education, and community engagement missions if we align our policies, training, and data infrastructure around a shared national strategy.

Through a national survey and a series of leadership convenings, this work has moved the system beyond scattered, isolated experiments. We now have a unified vision for moving forward.

The consensus from leaders across Cooperative Extension and agInnovation, representing 1862, 1890, and 1994 institutions, is more than just an observation. It is a clear mandate for action, built on three core priorities:

This study marks a turning point from individual exploration to an intentional, coordinated strategy. The challenge leaders identified is not technical; it is organizational: how to harness local innovation within a single, national framework.

The essential first step is to create the National AI Program Action Team (PAT). With support from partners like the Extension Foundation, this team will put the plan into action. This group, led by ECOP and agInnovation, can provide the leadership needed to align training, develop policy, and coordinate infrastructure investment. The Land-grant system is in a unique position to lead the national conversation about using AI responsibly and ethically for the public good. By adopting a coordinated strategy, the system can ensure AI strengthens human expertise, reinforces public trust, and carries the Land-grant mission into the next century.

Our mission is to empower a national network of community-based educators, volunteers, and partners to turn knowledge into real-world solutions for stronger communities and people.

The Extension Foundation is a nonprofit established in 2006 by Extension Directors and Administrators nationwide. The Extension Foundation is embedded in the U.S. Cooperative Extension System and serves on the Extension Committee on Organization and Policy (ECOP).
